
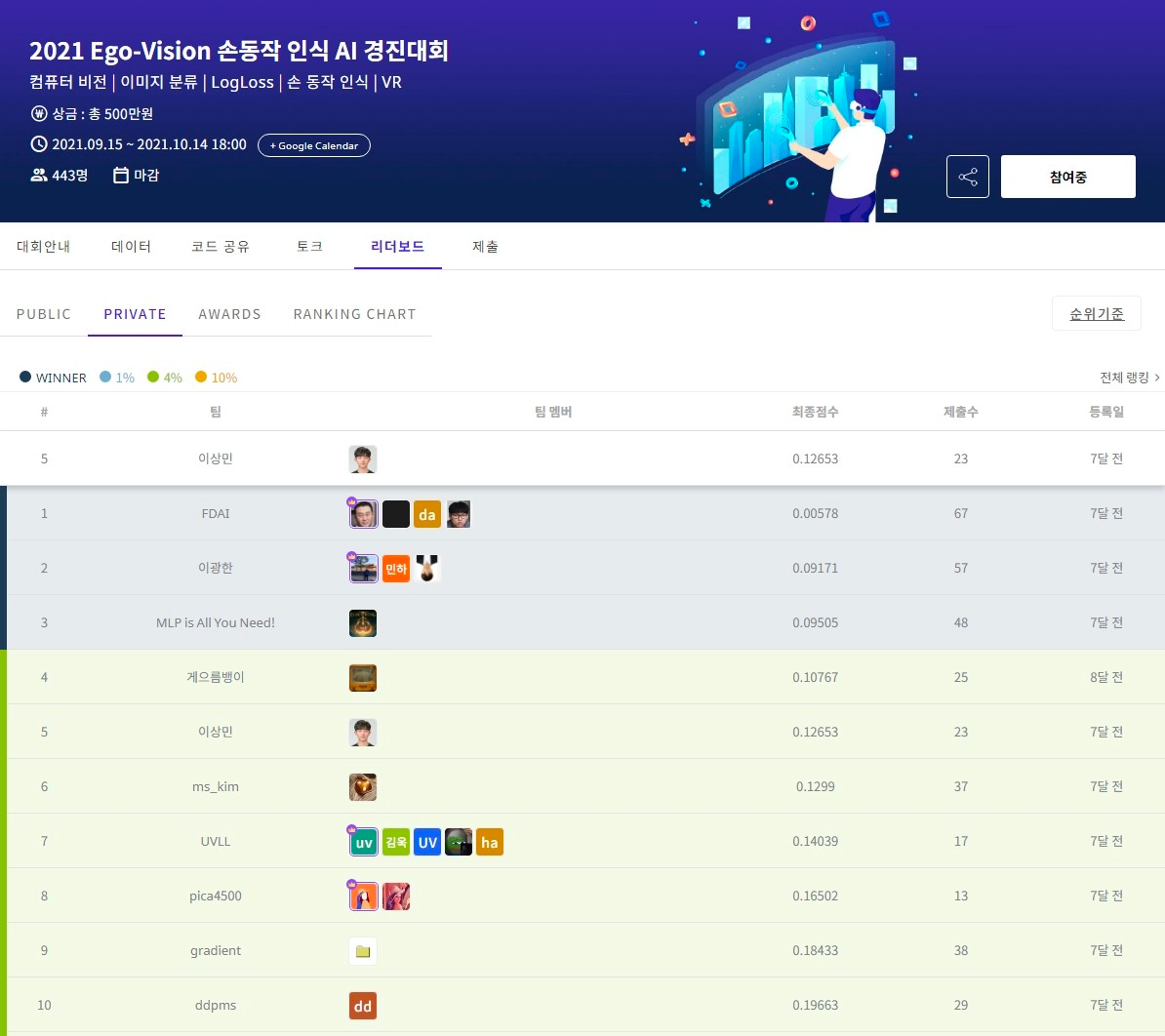

[Private 5th place, Public score: 0.16419, DenseNet161 (2021 Ego-Vision Hand Gesture Recognition AI Competition, dacon.io)](https://dacon.io/competitions/official/235805/codeshare/3596?page=1&dtype=recent)

To remove unnecessary information from the frames, two image processing steps were applied, Resize and Random crop. The training model is DenseNet161 as provided by the PyTorch library (torchvision).
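As a rough illustration of what these two steps do to the frame size, the sketch below reproduces the size arithmetic in plain Python. It assumes a 1920x1080 input frame (the dataset's actual resolution is not stated in this post) and mirrors how `transforms.Resize(256)` scales the shorter side to 256 while keeping the aspect ratio, after which a 224x400 (height x width) window is cut at a random offset. The helper names `resize_shorter_side` and `random_crop_box` are hypothetical and are not part of torchvision.

```python
import random


def resize_shorter_side(width, height, size=256):
    """Return (width, height) after scaling the shorter side to `size`."""
    if width <= height:
        return size, int(size * height / width)
    return int(size * width / height), size


def random_crop_box(width, height, crop_h=224, crop_w=400, rng=random):
    """Pick a random (left, top, right, bottom) window that fits in the image."""
    if crop_h > height or crop_w > width:
        raise ValueError("crop is larger than the image")
    top = rng.randint(0, height - crop_h)
    left = rng.randint(0, width - crop_w)
    return left, top, left + crop_w, top + crop_h


# Assumed input resolution; not taken from the dataset.
w, h = resize_shorter_side(1920, 1080)
print(w, h)  # 455 256

box = random_crop_box(w, h)
print(box[2] - box[0], box[3] - box[1])  # 400 224
```

With these assumed numbers the crop removes up to 55 columns and 32 rows, so different parts of the border are dropped on each draw during training, while validation and test use a fixed center crop.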

Code

    import json
    import os
    import warnings

    import matplotlib.pyplot as plt
    import numpy as np
    import pandas as pd
    import PIL.Image  # import the submodule explicitly so PIL.Image.open is always available
    import torch
    import torch.nn as nn
    import torch.optim as optim
    import torchvision
    import torchvision.models as models
    import torchvision.transforms as transforms
    from sklearn.model_selection import train_test_split
    from torch.utils.data import Dataset

    warnings.filterwarnings('ignore')

    def count(output, target):
        """Return the number of correct top-1 predictions in a batch."""
        with torch.no_grad():
            predict = torch.argmax(output, 1)
            correct = (predict == target).sum().item()
            return correct

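    def select_model(model, num_classes):
        """Build an ImageNet-pretrained DenseNet and replace its classifier head."""
        # Note: newer torchvision releases prefer the `weights=` argument over `pretrained=True`.
        if model == 'densenet121':
            model_ = models.densenet121(pretrained=True)
            model_.classifier = nn.Linear(1024, num_classes)
        elif model == 'densenet161':
            model_ = models.densenet161(pretrained=True)
            model_.classifier = nn.Linear(2208, num_classes)
        else:
            # Without this branch an unknown name fails later with an unbound variable.
            raise ValueError('Unsupported model: {}'.format(model))
        return model_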

    class Baseline():
        """Wraps a DenseNet classifier with simple training and evaluation loops."""

        def __init__(self, model, num_classes, gpu_id=0, epoch_print=1, print_freq=10, save=False):
            self.gpu = gpu_id
            self.epoch_print = epoch_print
            self.print_freq = print_freq
            self.save = save

            torch.cuda.set_device(self.gpu)
            self.loss_function = nn.CrossEntropyLoss().cuda(self.gpu)

            model = select_model(model, num_classes)
            self.model = model.cuda(self.gpu)

            # Histories are recorded every `print_freq` iterations, not every step.
            self.train_losses, self.test_losses = [], []
            self.train_acc, self.test_acc = [], []
            self.best_acc = None
            self.best_loss = None

        def train(self, train_data, test_data, epochs=100, lr=0.1, weight_decay=0.0001):
            self.model.train()
            optimizer = optim.Adam(self.model.parameters(), lr, weight_decay=weight_decay)

            for epoch in range(epochs):
                if epoch % self.epoch_print == 0: print('Epoch {} Started...'.format(epoch+1))
                for i, (X, y) in enumerate(train_data):
                    X, y = X.cuda(self.gpu), y.cuda(self.gpu)
                    output = self.model(X)
                    loss = self.loss_function(output, y)

                    optimizer.zero_grad()
                    loss.backward()
                    optimizer.step()

                    if (epoch % self.epoch_print == 0) and (i % self.print_freq == 0):
                        train_acc = 100 * count(output, y) / y.size(0)
                        test_acc, test_loss = self.test(test_data)

                        # Save a checkpoint when either validation accuracy or validation loss improves.
                        if self.save and ((self.best_acc is None) or (self.best_acc < test_acc) or (test_loss < self.best_loss)):
                            torch.save(self.model.state_dict(), '{}_{}.pt'.format(epoch, i))
                            self.best_acc = test_acc
                            self.best_loss = test_loss
                            print('Best Model Saved')

                        self.train_losses.append(loss.item())
                        self.train_acc.append(train_acc)
                        self.test_losses.append(test_loss)
                        self.test_acc.append(test_acc)

                        print('Iteration : {} - Train Loss : {:.6f}, Test Loss : {:.6f}, '
                              'Train Acc : {:.6f}, Test Acc : {:.6f}'.format(i+1, loss.item(), test_loss, train_acc, test_acc))
                print()

        def test(self, test_data):
            """Return (accuracy in percent, mean batch loss) on `test_data`."""
            correct, total = 0, 0
            losses = []

            self.model.eval()
            with torch.no_grad():
                for i, (X, y) in enumerate(test_data):
                    X, y = X.cuda(self.gpu), y.cuda(self.gpu)
                    output = self.model(X)

                    loss = self.loss_function(output, y)
                    losses.append(loss.item())

                    correct += count(output, y)
                    total += y.size(0)
            # Switch back to training mode because test() is called from inside the training loop.
            self.model.train()
            return (100*correct/total, sum(losses)/len(losses))
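The checkpoint rule in `train` is easy to misread, so here is a small plain-Python sketch of the same condition applied to made-up validation numbers. The function name `should_save` and the `history` values are hypothetical; only the boolean condition is taken from the code above.

```python
def should_save(best_acc, best_loss, test_acc, test_loss):
    """Same condition as in Baseline.train: first evaluation, better accuracy, or lower loss."""
    return best_acc is None or best_acc < test_acc or test_loss < best_loss


# Made-up (accuracy, loss) pairs for four successive evaluations.
history = [(50.0, 1.20), (55.0, 1.25), (54.0, 1.10), (53.0, 1.15)]

best_acc = best_loss = None
saved = []
for step, (acc, loss) in enumerate(history):
    if should_save(best_acc, best_loss, acc, loss):
        best_acc, best_loss = acc, loss  # both values are overwritten together
        saved.append(step)

print(saved)               # [0, 1, 2]
print(best_acc, best_loss)  # 54.0 1.1
```

Because both tracked values are overwritten on every save, the stored "best" accuracy can go down (55.0 to 54.0 here) when a checkpoint is saved for its lower loss. Each save also writes a new file named after the epoch and iteration, which is presumably how a file such as `8_225.pt` comes about.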

    data_dir = './train/'

    # Collect every frame path together with the action label of the video it belongs to.
    imgs, labels = [], []
    for num in sorted(os.listdir(data_dir)):
        with open(data_dir + '{}/{}.json'.format(num, num), 'r') as j:
            temp = json.load(j)
            for info in temp['annotations']:
                imgs.append(data_dir + '{}/{}.png'.format(num, info['image_id']))
                labels.append(temp['action'][0])

    # Map the raw labels to consecutive class indices 0..N-1.
    label_info = {label:i for i, label in enumerate(sorted(set(labels)))}

    # Stratified frame-level split; train_test_split holds out 25% by default.
    train_imgs, val_imgs, train_labels, val_labels = train_test_split(imgs, labels, random_state=0, stratify=labels)
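To make the labelling step concrete, the following self-contained sketch runs the same loop over made-up folder contents. The `videos` dictionary and all values in it are hypothetical stand-ins for the parsed JSON files; the real files may contain more fields.

```python
# Hypothetical parsed JSON contents for three videos (not real data).
videos = {
    '0': {'action': [12, 'made-up name A'], 'annotations': [{'image_id': 0}, {'image_id': 1}]},
    '1': {'action': [3, 'made-up name B'], 'annotations': [{'image_id': 0}]},
    '2': {'action': [12, 'made-up name A'], 'annotations': [{'image_id': 0}]},
}

imgs, labels = [], []
for num in sorted(videos):
    temp = videos[num]
    for info in temp['annotations']:
        # Every frame inherits the first entry of its video's `action` list.
        imgs.append('./train/{}/{}.png'.format(num, info['image_id']))
        labels.append(temp['action'][0])

# Sorted unique labels become consecutive class indices.
label_info = {label: i for i, label in enumerate(sorted(set(labels)))}

print(labels)      # [12, 12, 3, 12]
print(label_info)  # {3: 0, 12: 1}
```

Since the split is made per frame rather than per video, frames from the same clip can end up in both the training and validation sets, so the validation accuracy may be somewhat optimistic.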

    class TrainDataset(Dataset):
        """Training frames and labels from the split above."""

        def __init__(self, transform=None):
            self.imgs = train_imgs
            self.labels = train_labels
            self.label_info = label_info
            self.transform = transform

        def __getitem__(self, idx):
            img = PIL.Image.open(self.imgs[idx]).convert('RGB')
            if self.transform: img = self.transform(img)
            label = self.label_info[self.labels[idx]]
            return img, label

        def __len__(self):
            return len(self.imgs)

    class ValDataset(Dataset):
        """Validation frames and labels from the split above."""

        def __init__(self, transform=None):
            self.imgs = val_imgs
            self.labels = val_labels
            self.label_info = label_info
            self.transform = transform

        def __getitem__(self, idx):
            img = PIL.Image.open(self.imgs[idx]).convert('RGB')
            if self.transform: img = self.transform(img)
            label = self.label_info[self.labels[idx]]
            return img, label

        def __len__(self):
            return len(self.imgs)

    batch_size = 16

    # Training: resize the shorter side to 256, then take a random 224x400 (H x W) crop.
    train_transform = transforms.Compose([
        transforms.Resize(256), transforms.RandomCrop((224, 400)),
        transforms.ToTensor(), transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])])

    # Validation: same resize, but a deterministic center crop.
    val_transform = transforms.Compose([
        transforms.Resize(256), transforms.CenterCrop((224, 400)),
        transforms.ToTensor(), transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])])

    train_dataset = TrainDataset(transform=train_transform)
    val_dataset = ValDataset(transform=val_transform)

    train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=True)
    val_loader = torch.utils.data.DataLoader(val_dataset, batch_size=batch_size, shuffle=False)

    model = Baseline(model='densenet161', num_classes=len(train_dataset.label_info), print_freq=5, save=True)

    epochs = 10
    lr = 0.0005
    weight_decay = 0.00001

    model.train(train_loader, val_loader, epochs=epochs, lr=lr, weight_decay=weight_decay)

    label_fontsize = 25

    # Loss curves (one point per logged iteration).
    plt.figure(figsize=(20, 10))
    train_lossline, = plt.plot(model.train_losses, label='Train')
    test_lossline, = plt.plot(model.test_losses, color='red', label='Test')
    plt.legend(handles=[train_lossline, test_lossline], fontsize=20)
    plt.xlabel('Step', fontsize=label_fontsize)
    plt.ylabel('Loss', fontsize=label_fontsize)
    plt.show()

    # Accuracy curves.
    plt.figure(figsize=(20, 10))
    train_accline, = plt.plot(model.train_acc, label='Train')
    test_accline, = plt.plot(model.test_acc, color='red', label='Test')
    plt.legend(handles=[train_accline, test_accline], fontsize=20)
    plt.xlabel('Step', fontsize=label_fontsize)
    plt.ylabel('Acc', fontsize=label_fontsize)
    plt.show()

    # Rebuild the network and load the saved checkpoint for inference.
    model = Baseline(model='densenet161', num_classes=157)
    model.model.load_state_dict(torch.load('./8_225.pt'))
    model.model.eval()

    test_transform = transforms.Compose([
        transforms.Resize(256), transforms.CenterCrop((224, 400)),
        transforms.ToTensor(), transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225])])

    data_dir = './test/'

    predictions = []
    with torch.no_grad():
        for num in sorted(os.listdir(data_dir)):
            with open(data_dir + '{}/{}.json'.format(num, num), 'r') as j:
                temp = json.load(j)
                imgs = []
                for info in temp['annotations']:
                    img_dir = data_dir + '{}/{}.png'.format(num, info['image_id'])
                    img = PIL.Image.open(img_dir).convert('RGB')
                    img = test_transform(img)
                    imgs.append(img)

            # Predict every frame of the video in one batch, then average the class probabilities.
            imgs = torch.stack(imgs).cuda()
            prediction = torch.nn.Softmax(dim=1)(model.model(imgs))
            prediction = torch.mean(prediction, dim=0)

            # Sanity check with a tolerance; an exact `!= 1` comparison trips on float rounding.
            total_prob = torch.sum(prediction).item()
            if abs(total_prob - 1) > 1e-4: print(total_prob)
            predictions.append(prediction.cpu().numpy())

    print(len(predictions))
    print(len(predictions[0]))
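The per-video prediction above is simply the mean of the per-frame softmax outputs. The sketch below shows that step in plain Python on made-up logits for three frames and three classes; the helper names and the numbers are hypothetical, and the real code does the same thing on GPU tensors.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mean_over_frames(frame_probs):
    """Average class probabilities across frames (column-wise mean)."""
    n = len(frame_probs)
    return [sum(col) / n for col in zip(*frame_probs)]


# Made-up logits: 3 frames x 3 classes.
frame_logits = [[2.0, 0.5, -1.0], [1.5, 1.0, 0.0], [3.0, -0.5, 0.2]]
frame_probs = [softmax(row) for row in frame_logits]
video_probs = mean_over_frames(frame_probs)

print(video_probs.index(max(video_probs)))  # 0
print(math.isclose(sum(video_probs), 1.0))  # True
```

The mean of probability vectors is again a probability vector, so the sum stays at 1 up to floating-point rounding, which is why a tolerance-based check is more useful than an exact comparison.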

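    sample_submission = pd.read_csv('./sample_submission.csv')
    # Column 0 is left untouched; the remaining columns receive the class probabilities.
    sample_submission.iloc[:, 1:] = np.array(predictions)
    sample_submission.to_csv('./densenet161_8_225.csv', index=False)

    sample_submission.iloc[:, 1:]  # display the submitted probabilities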
